
Finding the best time series model with cross-validation

In the previous post, I preprocessed and visualized the Open Power System Data.

2021.04.15 - [Programming/Time series forecasting] - Time series analysis I: importing and plotting data

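In this post, starting from the final dataset prepared last time, I look at how to use cross-validation to find the best-performing model for time series forecasting. The procedure follows the post at the reference link.

The final cleaned data from the post linked above is as follows.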

    display(data)  # cleaned DataFrame from the previous post

    # Keep only the target column; copy so that `data` itself is not modified
    data_consumption = data.loc[:, ['Consumption']].copy()
    # Previous day's consumption
    data_consumption['Yesterday'] = data_consumption['Consumption'].shift()
    # Change in the previous day's consumption relative to the day before it
    data_consumption['Yesterday_Diff'] = data_consumption['Yesterday'].diff()
    # shift() and diff() leave NaN in the first two rows
    data_consumption = data_consumption.dropna()
    data_consumption
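To make the two lag features concrete, here is a minimal sketch on a made-up five-day series. The values and the names `toy` and `toy_features` are hypothetical and only stand in for the real `Consumption` column.

```python
import pandas as pd

# Hypothetical toy series standing in for the real 'Consumption' column.
toy = pd.DataFrame(
    {"Consumption": [10.0, 12.0, 15.0, 11.0, 14.0]},
    index=pd.date_range("2020-01-01", periods=5, freq="D"),
)

# Value observed one day earlier.
toy["Yesterday"] = toy["Consumption"].shift()
# Change between yesterday and the day before yesterday.
toy["Yesterday_Diff"] = toy["Yesterday"].diff()

# The first two rows contain NaN and are dropped.
toy_features = toy.dropna()
print(toy_features)
```

Both features use only values from earlier days, so no information from the day being predicted leaks into the inputs.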

Set train and test dataset by splitting data_consumption

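    # Train on everything up to the end of 2016, test on 2017.
    # Row selection by year string goes through .loc; df['2017'] no longer works in pandas 2.x.
    X_train = data_consumption.loc[:'2016'].drop(['Consumption'], axis=1)
    y_train = data_consumption.loc[:'2016', 'Consumption']
    X_test = data_consumption.loc['2017'].drop(['Consumption'], axis=1)
    y_test = data_consumption.loc['2017', 'Consumption']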
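The split relies on partial string indexing of a `DatetimeIndex`. A small sketch, assuming a hypothetical four-day frame that straddles the year boundary (`toy`, `train` and `test` are illustration names only):

```python
import pandas as pd

# Hypothetical frame with two days in 2016 and two days in 2017.
toy = pd.DataFrame(
    {"Consumption": [1.0, 2.0, 3.0, 4.0]},
    index=pd.date_range("2016-12-30", periods=4, freq="D"),
)

# ':"2016"' keeps every row up to and including the last timestamp in 2016.
train = toy.loc[:"2016"]
# '"2017"' keeps every row whose timestamp falls in 2017.
test = toy.loc["2017"]

print(train.index.min(), train.index.max())
print(test.index.min(), test.index.max())
```

The training rows all precede the test rows in time, which is what a forecasting evaluation needs.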

Helper function: performance metrics

import sklearn.metrics as metrics

def regression_results(y_true, y_pred):

# Regression metrics

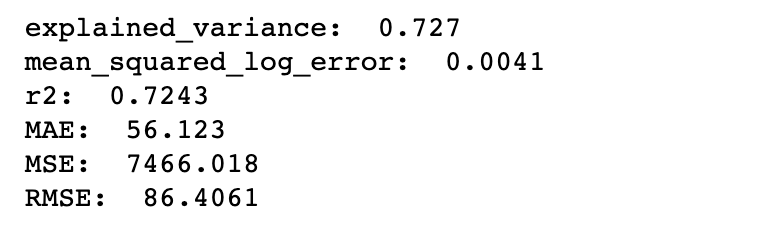

explained_variance=metrics.explained_variance_score(y_true, y_pred)

mean_absolute_error=metrics.mean_absolute_error(y_true, y_pred)

mse=metrics.mean_squared_error(y_true, y_pred)

mean_squared_log_error=metrics.mean_squared_log_error(y_true, y_pred)

median_absolute_error=metrics.median_absolute_error(y_true, y_pred)

r2=metrics.r2_score(y_true, y_pred)

print('explained_variance: ', round(explained_variance,4))

print('mean_squared_log_error: ', round(mean_squared_log_error,4))

print('r2: ', round(r2,4))

print('MAE: ', round(mean_absolute_error,4))

print('MSE: ', round(mse,4))

print('RMSE: ', round(np.sqrt(mse),4))

Cross-validation strategies

a. Time-series cross validation (tscv)

    import matplotlib.pyplot as plt
    from sklearn.linear_model import LinearRegression
    from sklearn.neural_network import MLPRegressor
    from sklearn.neighbors import KNeighborsRegressor
    from sklearn.ensemble import RandomForestRegressor
    from sklearn.svm import SVR
    from sklearn.model_selection import TimeSeriesSplit, cross_val_score

    # Spot Check Algorithms
    models = []
    models.append(('LR', LinearRegression()))
    models.append(('NN', MLPRegressor(solver='lbfgs')))  # neural network
    models.append(('KNN', KNeighborsRegressor()))
    # Ensemble method: a collection of many decision trees
    models.append(('RF', RandomForestRegressor(n_estimators=10)))
    models.append(('SVR', SVR(gamma='auto')))  # default kernel is 'rbf'

    # Evaluate each model in turn
    results = []
    names = []
    for name, model in models:
        # Time series cross-validation: each fold trains on the past only
        tscv = TimeSeriesSplit(n_splits=10)
        cv_results = cross_val_score(model, X_train, y_train, cv=tscv, scoring='r2')
        results.append(cv_results)
        names.append(name)
        print('%s: %f (%f)' % (name, cv_results.mean(), cv_results.std()))

    # Compare Algorithms
    plt.boxplot(results, labels=names)
    plt.title('Algorithm Comparison')
    plt.show()
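To see what `TimeSeriesSplit` actually hands to `cross_val_score`, the sketch below prints the fold indices for a hypothetical series of 12 observations and 3 splits. The names `X_toy`, `tscv_toy` and `folds` are made up for illustration.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Hypothetical toy data: 12 consecutive observations.
X_toy = np.arange(12).reshape(-1, 1)

tscv_toy = TimeSeriesSplit(n_splits=3)
folds = [
    (train_idx.tolist(), test_idx.tolist())
    for train_idx, test_idx in tscv_toy.split(X_toy)
]

for i, (train_idx, test_idx) in enumerate(folds, start=1):
    print(f"fold {i}: train={train_idx} test={test_idx}")
```

The training window grows with every fold and always ends right before the test window, so the model is never evaluated on data older than what it was trained on.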

b. Blocked cross-validation (btscv)

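    import numpy as np

    class BlockingTimeSeriesSplit():
        """Split the series into n_splits disjoint blocks; within each block,
        the first 80% is used for training and the last 20% for testing."""

        def __init__(self, n_splits):
            self.n_splits = n_splits

        def get_n_splits(self, X=None, y=None, groups=None):
            # Same signature as the scikit-learn splitters, so GridSearchCV can call it too
            return self.n_splits

        def split(self, X, y=None, groups=None):
            n_samples = len(X)
            k_fold_size = n_samples // self.n_splits
            indices = np.arange(n_samples)

            margin = 0  # optional gap between the train and test indices
            for i in range(self.n_splits):
                start = i * k_fold_size
                stop = start + k_fold_size
                mid = int(0.8 * (stop - start)) + start
                yield indices[start:mid], indices[mid + margin:stop]

    # Spot Check Algorithms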

    models = []
    models.append(('LR', LinearRegression()))
    models.append(('NN', MLPRegressor(solver='lbfgs', max_iter=2000)))  # neural network
    models.append(('KNN', KNeighborsRegressor()))
    # Ensemble method: a collection of many decision trees
    models.append(('RF', RandomForestRegressor(n_estimators=10)))
    models.append(('SVR', SVR(gamma='auto')))  # default kernel is 'rbf'

    # Evaluate each model in turn
    results = []
    names = []
    for name, model in models:
        # Blocked cross-validation: folds do not share any observations
        btscv = BlockingTimeSeriesSplit(n_splits=10)
        cv_results = cross_val_score(model, X_train, y_train, cv=btscv, scoring='r2')
        results.append(cv_results)
        names.append(name)
        print('%s: %f (%f)' % (name, cv_results.mean(), cv_results.std()))

    # Compare Algorithms
    plt.boxplot(results, labels=names)
    plt.title('Algorithm Comparison (blocked CV)')
    plt.show()
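For comparison with the expanding-window folds, the following sketch reproduces the blocked split logic in a standalone function and prints the indices for a hypothetical series of 20 observations and 2 blocks. `blocked_splits` and `blocks` are illustration names, not part of scikit-learn.

```python
import numpy as np

def blocked_splits(n_samples, n_splits, train_fraction=0.8):
    """Hypothetical helper mirroring the split logic of BlockingTimeSeriesSplit."""
    fold_size = n_samples // n_splits
    indices = np.arange(n_samples)
    for i in range(n_splits):
        start = i * fold_size
        stop = start + fold_size
        # The first part of each block is for training, the rest for testing.
        mid = start + int(train_fraction * fold_size)
        yield indices[start:mid], indices[mid:stop]

blocks = [(tr.tolist(), te.tolist()) for tr, te in blocked_splits(20, 2)]
for i, (tr, te) in enumerate(blocks, start=1):
    print(f"block {i}: train={tr} test={te}")
```

Unlike the expanding window, no observation appears in more than one block, so each fold's score is computed on data that no other fold has seen.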

Finding optimal forecasting model using GridSearchCV

    from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
    from sklearn.metrics import make_scorer

    def rmse(actual, predict):
        """Root mean squared error between actual and predicted values."""
        predict = np.array(predict)
        actual = np.array(actual)
        distance = predict - actual
        square_distance = distance ** 2
        mean_square_distance = square_distance.mean()
        score = np.sqrt(mean_square_distance)
        return score

    # greater_is_better=False makes the scorer return -RMSE, which GridSearchCV maximizes
    rmse_score = make_scorer(rmse, greater_is_better=False)

    model = RandomForestRegressor()
    param_search = {
        'n_estimators': [20, 50, 100],
        # 'auto' was removed in scikit-learn 1.3; for regressors it meant all features (1.0)
        'max_features': [1.0, 'sqrt', 'log2'],
        'max_depth': [i for i in range(5, 15)]
    }

    tscv = TimeSeriesSplit(n_splits=10)
    gsearch = GridSearchCV(estimator=model, cv=tscv, param_grid=param_search, scoring=rmse_score)
    gsearch.fit(X_train, y_train)
    best_score = gsearch.best_score_  # negative RMSE of the best parameter set
    best_model = gsearch.best_estimator_

    # Check the best model's performance on the test data
    y_true = y_test.values
    y_pred = best_model.predict(X_test)
    regression_results(y_true, y_pred)
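One detail that is easy to miss: because the scorer is built with `greater_is_better=False`, the value stored in `best_score_` is the negated RMSE. The sketch below checks this on made-up data with a `DummyRegressor`; `X_toy`, `y_toy`, `baseline`, `raw` and `scored` are hypothetical names used only here.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.metrics import make_scorer

def rmse(actual, predict):
    """Root mean squared error between actual and predicted values."""
    actual = np.asarray(actual)
    predict = np.asarray(predict)
    return np.sqrt(((predict - actual) ** 2).mean())

rmse_score = make_scorer(rmse, greater_is_better=False)

# Hypothetical toy data; the dummy model always predicts the mean of y_toy (2.5).
X_toy = np.zeros((4, 1))
y_toy = np.array([1.0, 2.0, 3.0, 4.0])
baseline = DummyRegressor(strategy="mean").fit(X_toy, y_toy)

raw = rmse(y_toy, baseline.predict(X_toy))    # plain RMSE, a positive number
scored = rmse_score(baseline, X_toy, y_toy)   # what GridSearchCV sees: -RMSE

print(raw, scored)
```

To report the RMSE of the best parameter set in the usual positive form, take `-gsearch.best_score_`.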

References
